Neural Network: Logistic Regression

In this post, I'll cover logistic regression and its implementation as a single-neuron network. I hope you have read my previous post on matrices and their operations using the NumPy package. You can click here to go to my previous post.

Logistic regression computes the probability that the output is 1. For example, if we train a logistic regression model to recognize images of dogs, then for any new image the model estimates the probability that the image shows a dog. The higher this probability, the more likely it is that the image is of a dog.
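To turn that probability into an actual "dog / not a dog" answer, a cut-off is needed. The sketch below assumes a threshold of 0.5, which is a common default but not something logistic regression itself fixes; `predict_label` is a hypothetical helper name.

```python
def predict_label(probability: float, threshold: float = 0.5) -> int:
    """Return 1 ("dog") if the estimated probability reaches the threshold, else 0 ("not a dog").

    The default threshold of 0.5 is an assumption chosen for illustration.
    """
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must lie in [0, 1]")
    return 1 if probability >= threshold else 0


print(predict_label(0.92))  # 1 -> "dog"
print(predict_label(0.17))  # 0 -> "not a dog"
```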

A neuron is the fundamental unit of computation in any neural network. A neuron receives a vector containing the input features. Each feature is multiplied by its corresponding weight, the products are summed, and a single bias term is added to this weighted sum. The result is a linear output, z. This linear output is then passed through the activation function.
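The linear step of a single neuron can be sketched in plain Python, without NumPy, so that each operation is visible. The feature values, weights and bias below are made-up numbers, and `linear_output` is a hypothetical helper name. Note that the bias is added once, to the sum, not to each feature.

```python
def linear_output(features, weights, bias):
    """Multiply each feature by its weight, sum the products, then add the bias once."""
    if len(features) != len(weights):
        raise ValueError("need exactly one weight per feature")
    weighted_sum = sum(x * w for x, w in zip(features, weights))
    return weighted_sum + bias


x = [2.0, -1.0, 0.5]   # three input features (made-up values)
w = [0.4, 0.3, -0.2]   # one weight per feature (made-up values)
b = 0.1                # a single scalar bias
z = linear_output(x, w, b)
print(z)  # approximately 0.5 (floating-point rounding may show extra digits)
```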

Activation Function

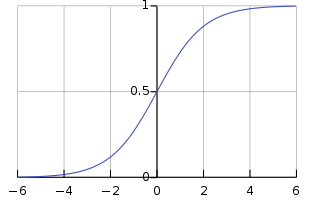

An activation function is a non-linear transformation applied to the linear output. Depending on the function, its output lies between 0 and 1 or between -1 and +1. It maps values into a fixed range, which for us is between 0 and 1, so that the output can be read as a probability. For this post, I will use the sigmoid function as the activation function. The sigmoid function is defined by the following equation:

$$\sigma(z) = \frac{1}{1 + e^{-z}}$$

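The sigmoid function produces an S-shaped curve spanning both the left and right halves of the plane: it approaches 0 as z becomes very negative, approaches 1 as z becomes very positive, and equals 0.5 at z = 0. According to Wikipedia, a sigmoid function is a bounded, differentiable, real function that is defined for all real input values and has a non-negative derivative at each point.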

Source: Wikipedia
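The sigmoid and the non-negative-derivative property can be checked numerically. This is a minimal NumPy sketch; the derivative formula σ(z)(1 − σ(z)) is the standard closed form for the logistic sigmoid. For very large negative inputs `np.exp(-z)` can overflow and emit a warning, so a production version might use a numerically stable variant instead.

```python
import numpy as np


def sigmoid(z):
    """Logistic sigmoid, applied element-wise: 1 / (1 + e^(-z))."""
    return 1.0 / (1.0 + np.exp(-z))


def sigmoid_derivative(z):
    """Derivative of the sigmoid: sigma(z) * (1 - sigma(z)), which is never negative."""
    s = sigmoid(z)
    return s * (1.0 - s)


z = np.linspace(-10.0, 10.0, 9)       # evenly spaced inputs from -10 to 10
print(np.round(sigmoid(z), 4))        # rises from near 0 to near 1
print(np.round(sigmoid_derivative(z), 4))  # peaks at z = 0, never below 0
```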

Weights and Biases

Weights and biases control how the input features are combined, and they are adjusted so that the final output comes close to the actual output. Each feature is multiplied by its weight, the products are summed, and then the bias is added:

$$z = w^T x + b$$

The result is then passed to the activation function. I recently came across an interesting explanation of the bias on StackOverflow. As the name suggests, bias means favouring something irrespective of whether that thing is right or wrong. Similarly, the bias term is added to the weighted sum regardless of the input, shifting the output in one direction.
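One way to see the bias acting "irrespective of the input" is to feed an input whose features are all zero: the weighted sum vanishes and the bias alone decides the output. The weights and bias values here are made-up numbers for illustration.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


w = np.array([[0.5], [-0.25]])   # hypothetical weights, one per feature (column vector)
x = np.zeros((2, 1))             # an input whose features are all zero

outputs = {}
for b in (-2.0, 0.0, 2.0):
    z = (w.T @ x).item() + b     # weighted sum is 0 here, so z equals the bias
    outputs[b] = sigmoid(z)
    print(f"b = {b:+.1f}  ->  sigmoid(z) = {outputs[b]:.3f}")
```

For any other input the same bias still shifts z by the same fixed amount; only the weighted-sum part changes.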

The output z now acts as the input to our sigmoid function. Always remember that the weight w is a column vector whose size equals the number of input features, while the bias b is a scalar. The sigmoid function maps z to a value between 0 and 1, which is the model's estimated probability that the input belongs to the positive class (output 1).
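Putting the pieces together for one sample, this NumPy sketch keeps the shapes described above: w and x are column vectors of shape (n, 1) and b is a scalar. The numbers are made up; the forward pass itself is just z = wᵀx + b followed by the sigmoid.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


n = 3                                   # number of features (assumed for the example)
w = np.array([[0.4], [0.3], [-0.2]])    # weights: column vector of shape (n, 1)
b = 0.1                                 # bias: a single scalar
x = np.array([[2.0], [-1.0], [0.5]])    # one input sample: column vector of shape (n, 1)

assert w.shape == (n, 1) and x.shape == (n, 1)
z = (w.T @ x).item() + b    # (1, n) @ (n, 1) -> (1, 1); .item() turns it into a Python float
a = sigmoid(z)              # estimated probability that this sample has label 1
print(f"z = {z:.3f}, a = {a:.3f}")
```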

The example above was for a single input sample. What if we have multiple input samples? Logistic regression works on multiple training samples as well. Suppose there are m training samples and each sample has n features; the input can then be written as an m × n matrix, which we will call X. Multiplying X by the weight vector w (of size n × 1) and adding the bias b to every entry gives a linear output vector Z of size m × 1. This vector is then passed to the activation function, which gives an output vector A containing the probability for each training sample:

$$Z = Xw + b, \qquad A = \sigma(Z)$$
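A vectorized version of the forward pass for m samples might look like the sketch below. The data is randomly generated purely for illustration, and `forward` is a hypothetical helper name. Each row of X is one sample, and NumPy broadcasting adds the scalar b to every entry of Xw.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(X, w, b):
    """Vectorized forward pass for m samples.

    X: (m, n) matrix, one training sample per row
    w: (n, 1) weight column vector
    b: scalar bias, broadcast to every row
    returns A: (m, 1) column of probabilities, one per sample
    """
    Z = X @ w + b          # (m, n) @ (n, 1) -> (m, 1); b is added to every entry
    return sigmoid(Z)


rng = np.random.default_rng(0)
m, n = 4, 3
X = rng.normal(size=(m, n))   # made-up data: 4 samples, 3 features each
w = rng.normal(size=(n, 1))   # made-up weights
b = 0.1
A = forward(X, w, b)
print(A.shape)  # (4, 1)
```

Computing all samples with one matrix product gives the same result as looping over the rows one at a time, but avoids the Python-level loop.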

In the next post, I'll cover the loss function, the cost function and the implementation of an SNN (Single Neuron Network).
