In this post, I'll cover the dense layer, the fully connected layer, and backpropagation. If you missed my previous post, click here.

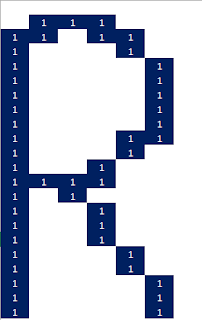

Before moving further, let's look at how a filter works on an image. I made a pixelated image of the letter 'R' and applied a 3 × 3 filter to it once and then three times. Keep in mind that the more times a filter is applied to the same image, the fewer features are left. The pixelated image of the letter 'R' and the filter are shown below.

Fig 1- Filter (Left) and the image (Right)

Fig 2- Filtering applied on the image (once and twice)

You can clearly see how the filter has faded the pixels that aren't in phase with it. The term 'phase' seems to fit here 😊. The darkened cells are the ones in phase with the filter. Even if the reference image is a bit distorted, rotated, flipped, or sheared, the feature will still get picked up as long as that part of the image stays in phase with the filter.

The filtered value is calculated the same way at every position of the filter. It is the sum of the products of the filter's 1s and 0s with the corresponding cell values of the image. The sum is then divided by the total number of cells in the filter matrix (here 9). A cell fades as its value gets closer to 0 and darkens as its value gets closer to 1.
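To make the arithmetic concrete, here is a minimal sketch of that calculation in plain Python. The function name `apply_filter`, the diagonal filter, and the small 4 × 4 image are my own assumptions for illustration; they are not the 'R' image from the figures.

```python
def apply_filter(image, kernel):
    """Slide `kernel` over `image` (no padding) and average each sum of products."""
    k = len(kernel)                  # the kernel is k x k (3 x 3 in this post)
    cells = k * k                    # 9 cells for a 3 x 3 filter
    out_rows = len(image) - k + 1
    out_cols = len(image[0]) - k + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            # Sum of products of the filter's 1s and 0s with the cells under it.
            total = sum(
                kernel[i][j] * image[r + i][c + j]
                for i in range(k)
                for j in range(k)
            )
            row.append(total / cells)  # divide by the number of filter cells
        output.append(row)
    return output


# Hypothetical diagonal filter and binary image, chosen only for illustration.
diagonal_filter = [
    [1, 0, 0],
    [0, 1, 0],
    [0, 0, 1],
]
image = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
    [0, 0, 0, 1],
]
filtered = apply_filter(image, diagonal_filter)
```

Where the image lines up with the filter's diagonal, three cells match and the value is 3/9 ≈ 0.33; where nothing matches, the value is 0.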

Rectified Linear Units (ReLU)

Now this filtered image is passed through the rectification layer. In the image below, the maximum cell value is 0.33 and the minimum is 0.11, so the middle value is 0.22. The rule I use here is: set every cell whose value is less than 0.22 to 0. (Strictly speaking, ReLU sets only negative values to 0; since every value in this example is positive, I use a threshold to illustrate the idea.) You can choose a different threshold: a value closer to the maximum filters the image more aggressively, while a value closer to the minimum lets some unnecessary features through. After passing the second image from Fig 2 above through the ReLU layer, we get an output like this:

Fig 3- ReLU layer applied to the filtered layer
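Here is a small sketch of the thresholding rule described above, next to the standard ReLU for comparison. The function names and the sample values are assumptions of mine; the values just stay within the 0.11 to 0.33 range mentioned in the text.

```python
def threshold_rectify(feature_map, threshold):
    """Set every cell whose value is below `threshold` to 0; keep the rest."""
    return [[v if v >= threshold else 0.0 for v in row] for row in feature_map]


def relu(feature_map):
    """Standard ReLU for comparison: only negative values become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]


# Hypothetical filtered values, not read off the actual figure.
filtered = [
    [0.11, 0.33, 0.22],
    [0.33, 0.11, 0.11],
]

# 0.22 is the middle of the minimum (0.11) and the maximum (0.33).
rectified = threshold_rectify(filtered, threshold=0.22)
```

Note that the standard `relu` would leave this particular map unchanged, because none of its values are negative.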

After the ReLU layer is applied, the image is passed through pooling, which I have already discussed in this post. The overall process looks like this:

- Convolution (Filtered)

- ReLU activation

- Pooling

The above sequence can be applied once, twice, or even more times.
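The three steps can be chained and repeated, roughly as in the sketch below. Everything here is an assumption for illustration: the helper names, the diagonal filter, the 10 × 10 test image, and the choice of non-overlapping 2 × 2 max pooling (the pooling details live in the other post).

```python
def convolve(image, kernel):
    """Sum of products under the kernel, divided by the number of kernel cells."""
    k = len(kernel)
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j]
                for i in range(k) for j in range(k)) / (k * k)
            for c in range(len(image[0]) - k + 1)
        ]
        for r in range(len(image) - k + 1)
    ]


def relu(feature_map):
    """Standard ReLU: negative values become 0."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    """Non-overlapping max pooling; leftover rows and columns are dropped."""
    rows = len(feature_map) // size
    cols = len(feature_map[0]) // size
    return [
        [
            max(feature_map[r * size + i][c * size + j]
                for i in range(size) for j in range(size))
            for c in range(cols)
        ]
        for r in range(rows)
    ]


def run_blocks(image, kernel, repeats):
    """Apply convolution -> ReLU -> pooling `repeats` times in a row."""
    out = image
    for _ in range(repeats):
        out = max_pool(relu(convolve(out, kernel)))
    return out


# Hypothetical 10 x 10 image with a diagonal stroke, and a diagonal filter.
image = [[1.0 if r == c else 0.0 for c in range(10)] for r in range(10)]
diagonal_filter = [[1, 0, 0], [0, 1, 0], [0, 0, 1]]

once = run_blocks(image, diagonal_filter, repeats=1)   # 10x10 -> 8x8 -> 4x4
twice = run_blocks(image, diagonal_filter, repeats=2)  # 4x4 -> 2x2 -> 1x1
```

Each pass shrinks the image, which is why the input has to be large enough for the number of repeats you pick.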

What is a fully connected layer?

A fully connected layer is a single row of neurons joined together, where every cell indicates a probability towards the actual answer. In the rectified image of 'R', darker cells have a greater value while faded cells have a lower value, so the darkest cells are the most likely to be in phase with that filter and the faded cells are much less likely to be. The rectified image above is the output of a single filter, and we have various filters that extract different features, so there is one such output for each filter.

Now every cell value is laid out in the form of an array. This process is carried out for every filter. An average is then taken over the dark cells, and similarly over the faded cells. These averages indicate the probability.

The average of the darkest cells from each filter shows the probability of how close our image is to the target ('R' here). Conversely, the average of the lighter cells from each filter shows the probability of how far our image is from it.

Fig 4- Fully Connected Array of all neurons (cells)

As in the image above, when the arrays obtained from each filter are joined together, we get our fully connected layer.
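The flattening and averaging described above can be sketched as follows. The function names, the two sample feature maps, and the 0.22 cut-off between "dark" and "faded" cells are assumptions made for this example. A trained network would learn weights instead of taking plain averages, so treat this only as an illustration of the idea.

```python
def flatten(feature_map):
    """Lay the cells of one rectified image out as a single array."""
    return [v for row in feature_map for v in row]


def build_fully_connected(feature_maps):
    """Join the flattened arrays from every filter into one long array."""
    joined = []
    for feature_map in feature_maps:
        joined.extend(flatten(feature_map))
    return joined


def dark_and_faded_averages(cells, threshold):
    """Average the dark cells (>= threshold) and the faded cells separately."""
    dark = [v for v in cells if v >= threshold]
    faded = [v for v in cells if v < threshold]

    def average(values):
        return sum(values) / len(values) if values else 0.0

    return average(dark), average(faded)


# Hypothetical rectified outputs of two different filters.
filter_outputs = [
    [[0.33, 0.0], [0.0, 0.33]],
    [[0.22, 0.0], [0.33, 0.0]],
]
fully_connected = build_fully_connected(filter_outputs)
dark_avg, faded_avg = dark_and_faded_averages(fully_connected, threshold=0.22)
```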

What is a dense layer?

A dense layer is just another name for a fully connected layer. The same operations take place in a dense layer, where every neuron is connected to every neuron in the previous layer. It is called dense because it represents a dense set of connections between neurons. A dense layer has a weight associated with every neuron pair, each with its own value. In Keras you will generally see Dense when working with CNNs, while in TensorFlow you may find fully_connected, so do not get confused by the two names. In Keras we often write Dense(10) followed by Dense(1). Here each of the 10 neurons is connected to the last neuron with its own weight. Since this is so dense, I don't think it's harmful to call it a dense layer. 😉
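To show what "a weight for every neuron pair" means, here is a plain-Python sketch of the forward pass from a 10-neuron layer into a single neuron. It is not how Keras implements Dense internally; the function name `dense_forward` and the random values are assumptions, and the activation function that a real Dense layer can apply is left out.

```python
import random


def dense_forward(inputs, weights, biases):
    """Each output neuron is a weighted sum of all inputs plus its bias.

    weights[j][i] is the weight between input neuron i and output neuron j.
    """
    return [
        sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        for neuron_weights, bias in zip(weights, biases)
    ]


rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical outputs of the 10-neuron layer.
hidden = [rng.random() for _ in range(10)]

# One output neuron connected to all 10 neurons: 10 weights and 1 bias.
weights = [[rng.uniform(-1.0, 1.0) for _ in range(10)]]
biases = [0.0]

output = dense_forward(hidden, weights, biases)
```

Going from 10 neurons to 1 neuron needs 10 weights; going from 10 to 10 would need 100, which is where the "dense" feeling comes from.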

What is a dropout layer?

As the name suggests, it drops out, or better said, eliminates some of the activated cells (cells that have passed through the activation layer). This is done to prevent over-fitting. An over-fit network will not be able to recognize the same features in different images of the same object. The CNN has to work in a robust environment, hence dropout becomes necessary. The dropout rate is typically chosen between 0.2 and 0.8. Dropout removes neurons at random according to the rate provided by the user, for example 0.4.
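A minimal sketch of the random removal looks like this. The function name and the sample activations are assumptions. I also use the common "inverted dropout" variant, which scales the surviving values by 1 / (1 - rate) and does nothing outside of training; the text above does not go into that detail.

```python
import random


def dropout(activations, rate, training=True, rng=random):
    """Zero each activation with probability `rate`; rescale the survivors."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in the range [0, 1)")
    if not training or rate == 0.0:
        return list(activations)      # dropout is only active during training
    keep = 1.0 - rate
    return [
        a / keep if rng.random() >= rate else 0.0   # drop when the draw < rate
        for a in activations
    ]


# Hypothetical activations; a seeded generator keeps the example repeatable.
activations = [0.33, 0.22, 0.33, 0.11, 0.33]
thinned = dropout(activations, rate=0.4, rng=random.Random(0))
```

With a rate of 0.4, roughly 40% of the neurons are dropped on each training pass, and a different random set is dropped every time.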

Consider an example where you have 20 cookies and 8 of them are half cookies. An overfit network will only recognize the fully circular cookies. A full-fledged CNN will pick up almost every cookie among the 8. With dropout, some cookies, whether circular or half, are dropped (removed) at random and the network is retrained. This improves the quality of the network.

Cheers, See ya all soon.
